- #WEB SCRAPING USING JAVASCRIPT HOW TO#

- #WEB SCRAPING USING JAVASCRIPT INSTALL#

- #WEB SCRAPING USING JAVASCRIPT PRO#

- #WEB SCRAPING USING JAVASCRIPT CODE#

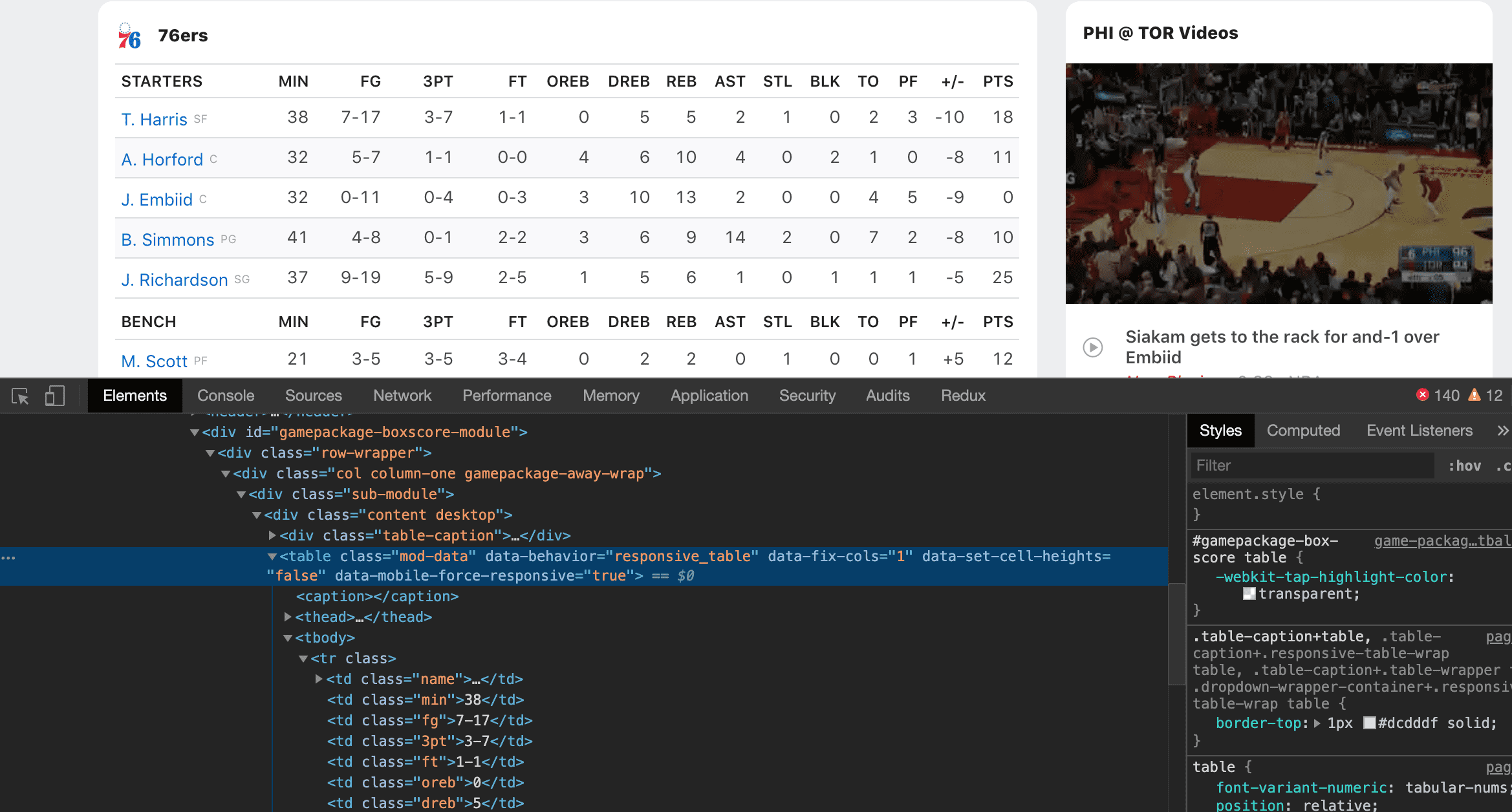

Next, let’s open a new text file (name the file potusScraper.js), and write a quick function to get the HTML of the Wikipedia “List of Presidents” page.

Cool, we got the raw HTML from the web page! But now we need to make sense of this giant blob of text. To do that, we’ll need to use Chrome DevTools to allow us to easily search through the HTML of a web page.

Using Chrome DevTools is easy: simply open Google Chrome, and right click on the element you would like to scrape (in this case I am right clicking on George Washington, because we want to get links to all of the individual presidents’ Wikipedia pages).
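The quick function for fetching the page's HTML, mentioned above, might look something like the sketch below. This guide uses the request-promise module, which has since been deprecated, so this sketch swaps in the `fetch` function built into Node.js 18 and later. The function name `getHtml`, the constant `PRESIDENTS_URL`, and the exact Wikipedia URL are assumptions made for illustration.

```javascript
// potusScraper.js
// Assumption: Node.js 18+, where fetch is available as a global.
// Assumption: this is the address of the Wikipedia "List of Presidents" page.
const PRESIDENTS_URL =
  'https://en.wikipedia.org/wiki/List_of_presidents_of_the_United_States';

// Resolves with the raw HTML of the page at pageUrl,
// or rejects if the request fails or returns a non-2xx status.
async function getHtml(pageUrl) {
  const response = await fetch(pageUrl);
  if (!response.ok) {
    throw new Error(`Request for ${pageUrl} failed with status ${response.status}`);
  }
  return response.text();
}

// Usage (performs a real network request):
// getHtml(PRESIDENTS_URL)
//   .then(html => console.log(html))
//   .catch(err => console.error(err));

module.exports = { getHtml, PRESIDENTS_URL };
```

Uncommenting the usage lines and running `node potusScraper.js` should print the page's raw HTML to the terminal.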

#WEB SCRAPING USING JAVASCRIPT INSTALL#

We will be gathering a list of all the names and birthdays of U.S. presidents from Wikipedia and the titles of all the posts on the front page of Reddit.

First things first: Let’s install the libraries we’ll be using in this guide (Puppeteer will take a while to install as it needs to download Chromium as well).

#WEB SCRAPING USING JAVASCRIPT PRO#

This guide will walk you through the process with the popular Node.js request-promise module, CheerioJS, and Puppeteer. Working through the examples in this guide, you will learn all the tips and tricks you need to become a pro at gathering any data you need with Node.js!

#WEB SCRAPING USING JAVASCRIPT HOW TO#

I will leave you with this example demonstrating how to output a list of all links pointing to PDF files.

#WEB SCRAPING USING JAVASCRIPT CODE#

Node.js is a relatively young project but a very exciting one!

For a useful script check out Node.js HREF scraper by Extension. This is a very simple and generic script that will let you grab all HREFs on a given URL that point to a file extension you specify.

For me, jQuery is my go-to when I need to write JavaScript. Luckily, there are libraries that essentially give us jQuery functionality with Node.js. All three of these can be installed using `npm install jquery nquery cheerio`. npm is the package manager, similar to Ruby's gem.

My first experience was with Cheerio, and getting started was as simple as: `var cheerio = require('cheerio')`. After that, you can use `$` the same way you would with jQuery.

The last piece of the puzzle is the request package. You can install it by running `npm install request`. Combining the request package with cheerio leads us to this: `var request = require('request')`. This snippet will output the HTML contents of the requested page to your shell.

Now that we have the ability to grab remote pages and work on them with jQuery, the rest is the fun part. I am not going to be too specific here because the code is going to differ based on what page you are looking at and what information you are after.

Node.js is, according to their website, "a platform built on Chrome's JavaScript runtime for easily building fast, scalable network applications. Node.js uses an event-driven, non-blocking I/O model that makes it lightweight and efficient, perfect for data-intensive real-time applications that run across distributed devices." It is essentially a JavaScript interpreter for the command line. With Node.js, you can write scripts in JavaScript just like you would with PHP and Python. You can also enter a console shell, just like Python, or the console in browser development tools.
